In each conflict that Israel is embroiled in, and with every bombing campaign on Gaza, people share images, videos, posts, and testimonials that document the details and stark realities of the aggression. This is especially true with content that is not featured by mainstream news outlets, either due to political agendas, or in compliance with the international laws and ethical standards governing media broadcasts, which prohibit showing images of individuals in distress, as well as graphic content that showcases lifeless children, injured individuals, or human remains, which are all images that frequently surface from the Gaza Strip during these attacks.

And every time, Facebook posts in the Arabic language begin circulating with statements like: "My account has been banned because of my last post," or "My post has been removed by Facebook," or “Watch this video before it is deleted,” or the most famous phrase during these times: "Damn you, Mark."

Once an individual creates a Facebook account, they implicitly accept its terms of use, conditions, and the regulations set by the platform. These rules and regulations forbid the dissemination of "prohibited content, including hate speech or any form of incitement to violence". The question is whether these guidelines and restrictions apply equally and with the same precision to all languages and political inclinations.

"Yes, I feel that Facebook is biased against us"

Tabarak Al-Yassin, a Palestinian writer and artist residing in the city of Zarqa, Jordan, says, "My Facebook account was banned twice during Operation (Lions' Den) due to my sharing of videos featuring martyrs and their funerals."

When asked by Raseef22 whether she perceives the bans as stemming from bias or the presence of violent content, she responded, "My feeling is that Facebook is biased against us. Facebook allowed us to post and share images that, when shared with the world, would garner sympathy for our enemy. For instance, photos of Israeli prisoners are allowed, but in contrast, sharing images of Palestinian martyrs is prohibited."

Is this bias deliberate?

To address this question, we asked Mona Al-Ghanem, a digital marketing expert working for a major firm in New York, whether Facebook and Instagram exhibit partiality toward one side or the other in the Israeli-Palestinian conflict. She provided the following perspective: "There is something more important than my own opinion, and that is the data presented in reports by Meta itself."

"Facebook is biased against us. It allows us to post and share images that, when shared with the world, would garner sympathy for our enemy, such as photos of Israeli prisoners, but sharing images of Palestinian martyrs is prohibited"

Al-Ghanem underscores that Meta, the parent company of Facebook and Instagram, had enlisted an independent consultancy firm in 2022 to scrutinize the social responsibility of its platforms. She adds, "The central question that this pivotal report must address is whether bias exists. Is this bias deliberate or unintentional? The report has explicitly contended that the bias is indeed deliberate."

Al-Ghanem quotes the following paragraph from the report: “Meta’s actions in May 2021 appear to have had an adverse human rights impact… on the rights of Palestinian users to freedom of expression, freedom of assembly, political participation, and non-discrimination, and therefore on the ability of Palestinians to share information and insights about their experiences as they occurred.”

In conclusion, the report recommends that Meta needs to employ more professionals who are familiar with Arab culture and are knowledgeable about the contexts of events.

What constitutes violent content and hate speech?

Violent content on social media platforms encompasses any form of content that incorporates elements of violence, encourages violence, or references violent actions in a manner that raises concerns against individuals or groups. These concerns are often related to factors such as race, ethnicity, religion, or gender.

Hate speech, on the other hand, refers to verbal or written expressions intended to promote hatred, incite discrimination, or stimulate violence against a particular category of individuals or groups. These expressions are based on specific factors, including race, religion, gender, national affiliation, beliefs, culture, or political or social beliefs.

Official report: “Meta’s actions in 2021 appear to have had an adverse human rights impact… on the rights of Palestinian users to freedom of expression, freedom of assembly, non-discrimination, and the ability of Palestinians to share their experiences”

Qassem Ahmed, an architect working in Amman, expressed his confusion, stating, "I don't understand how we can discuss war news without addressing hatred and violence. Aren't hatred and violence the driving forces behind wars and conflicts? Hatred as a motive and violence as a tool?"

Qassem shared with Raseef22, "Personally, I engage more with Gaza news on Instagram and TikTok than on Facebook. I feel that the news reaches me faster with less scrutiny. I might be mistaken, but a picture is worth a thousand words, as they say."

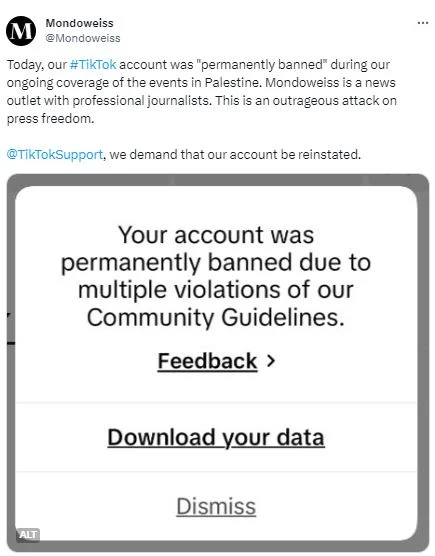

However, Qassem might be mistaken: Instagram belongs to the same parent company as Facebook and follows the same policies on sharing violent images and videos or content that incites hatred. As for TikTok, which is banned in Jordan, the platform has deactivated many accounts that support the Palestinian cause. Most recently, the news platform Mondoweiss, which specializes in the Israeli-Palestinian conflict, had its TikTok account banned and announced the ban on its X account.

Social Media platforms ban content and suspend accounts covering the Israeli aggression on Palestine, while keeping harmful pro-Israeli and anti-Palestinian posts inciting hatred and violence

Who decides who is the victim and who is the culprit?

To distinguish between hate speech and peace advocacy during conflicts, one must answer a fundamental question: who is the victim, and who is the culprit in the conflict from the platform's perspective? In this case, the victim can publish images proving the persecution they are undergoing, while the alleged criminal or "terrorist" side cannot share the same images because they would be deemed violent and graphic.

Some individuals working within the Israeli cybersecurity unit, who also serve as volunteer content moderators for Facebook, hold the power to delete content and issue bans without needing to go through routine procedures.

Specifically for this purpose, and because social media platforms rely on what is called “machine learning”, the Israeli government established the “Cybersecurity Unit.”

This unit regularly submits requests to Facebook to remove content and posts that contradict its narrative. A report published by the Israeli Ministry of Justice in 2018 reveals that some individuals working in this unit also volunteer to monitor and oversee content on Facebook, for the platform itself. This means that they do not need to go through the lengthy content removal procedures that other users are required to follow.

Aseel Odeh, an artificial intelligence software developer, underscores the implications of the cooperation that Facebook permits with some parties, which may not favor freedom of opinion and expression. She explains, "Facebook is the sole entity responsible for restricting and directly interfering with content, but there is indirect restriction from some governmental entities through requests to restrict any content that violates their laws, or from business partners, or due to the presence of groups and institutions that organize campaigns to report specific content. Typically, this results in Facebook responding to these reports, deeming the content in violation of their standards. But the good news is that both Facebook and TikTok, like all technology companies, publish transparency reports that discuss content standards, criteria, and policies based on user feedback and government requests."

Samia, a journalist from Cairo, had a different experience. After encountering an Israeli account advocating for the complete destruction of Gaza, she reported it to Facebook. She recalls, "I came across an Israeli account distributing laughter emoji reactions and 'hahah' comments on posts providing the number of victims on the Gaza Health Ministry's Facebook page. I was intrigued enough to enter the account, thinking it was a troll account. But then I found a post explicitly calling for the 'annihilation of Gaza'. I tried to report it to Facebook because this was blatant hate speech and incitement based on national origin." She was shocked to discover that the reporting feature was not available for this account or its incendiary post. She explains, "Facebook blocked me from posting content or cynical comments multiple times, claiming it was hateful, while this unjust hatred is not just allowed for Israelis, but also people can't even report it."

She adds, "After three days, Facebook responded, stating that the post calling for the annihilation of Gaza and its inhabitants did not violate its standards."

The human element in play

Odeh tells Raseef22, "First and foremost, we must grasp the following: Facebook has never revealed the type of its algorithms, how they function, or the specific policies it adheres to." She emphasizes that algorithms, by themselves, do not exhibit bias; rather, any perceivable bias is a consequence of human intervention – specifically the developers – feeding them with biased data.

Experts state that algorithms, by themselves, do not exhibit bias; rather, any perceivable bias is a consequence of human intervention, feeding them with biased information and causing all this harm.

Odeh goes on to explain, "From the outset, data was cherry-picked to bolster a particular viewpoint, selectively identifying sources. When it comes to the conflict with Israel, the Israeli narrative was embraced without due consideration for opposing viewpoints. This was achieved by employing 'supervised learning' data, which entails defining and classifying acceptable speech types, and rating each perspective."

Odeh clarifies that, ultimately, it is humans who exercise control over algorithms through direct instruction and training. The human team teaches the algorithm how to approach certain sensitive subjects in accordance with the team's own criteria.

She adds, "With regard to keywords, their mere presence cannot be a reason for a post ban or removal. The algorithms make an effort to comprehensively analyze the entire text to grasp the broader context. Hence, we may find that the inclusion of a term like 'Palestine' in a non-political text is deemed acceptable and does not violate their standards. However, words such as 'Hamas' and others are handled by rule-based AI."

Censorship is even worse beyond Facebook

Censorship and bans aren't confined to social media platforms; what happens on Facebook does not stay on Facebook.

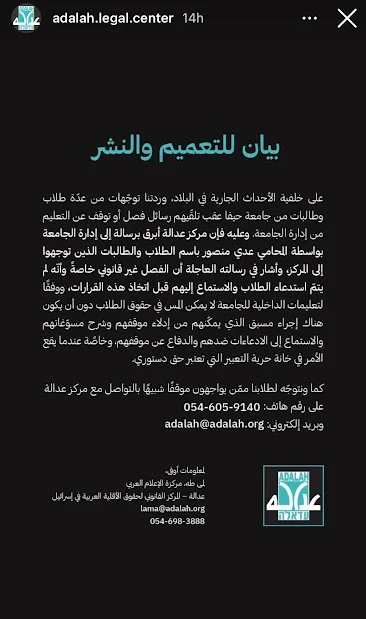

On October 8th, the Adalah legal center, headquartered in Haifa, issued a statement condemning the University of Haifa for expelling several students due to their social media posts concerning ongoing events.

In a separate case, the Magistrate Court in Petah Tikva extended by three days the detention of a young woman from the nearby city of Taibe. The extension came in response to her Facebook posts, which were deemed to be "supporting Palestinian factions in Gaza" and coincided with the start of the "Al-Aqsa Storm" operation.

These arrests aren't a novel development. Facebook frequently serves as the primary catalyst for handing over individuals with opposing views or those not aligning with the general policies of countries of the Middle East. If Facebook doesn't impose bans, their respective governments may resort to imprisonment, and Israel is no exception, despite its assertions of being the only democracy in the Middle East.

Outsmarting Algorithms: Is it feasible?

Al-Ghanem asserts, "Facebook takes the top spot as the worst enforcer of censorship, and Instagram, following the same policy, comes in second. Then there's X (formerly Twitter), which exercises censorship to a lesser extent. All of these platforms run counter to the fundamental right of freedom of thought and expression." She advises users to favor written content over images and videos, given that visual posts are more swiftly detected by algorithms. She also recommends inserting hyphens or splitting words to elude algorithmic detection. For instance, a person can write "Isra-el" or "Isr..ael" instead of "Israel".

Journalist: "I tried to report an Israeli account that was calling for the extermination of Gaza, because it promotes hate speech and genocide based on nationality. Not only was the report denied, but I discovered that reporting such an account is forbidden."

She goes on to say: "I recommend that anyone with an Instagram account open their settings and switch the 'Sensitive Content' option to 'More.' This will unveil a lot of restricted content, which, these days, is usually related to Palestine. It's also essential to refrain from simply copying and sharing a single text in campaigns, as this is the easiest way for the content to be identified and removed, or the account to be deleted. Additionally, avoid using too many hashtags; one hashtag is adequate."

The above closely resembles the approach taken by the writer, Tabarak Al-Yassin, to avoid having her account banned. She mentions, "I attempt to circumvent this as much as possible by inserting punctuation marks within a single word that I feel may lead to the closure of my account."

In this context, Odeh points out, "Text-based images are treated as text, but images are consistently rejected, especially if they are graphic and violent. I don't think there is any bias in this matter. Posting images of Palestinian flags, Hamas flags, or Israeli flags does not result in a ban, unlike images of casualties. This is another indication that bias is determined by the use of certain words."

She concludes, "According to platform policies, there is no explicit explanation for rejecting Palestinian or Israeli content. Nevertheless, several considerations are taken into account before restricting accounts, including the type of content, the pages the user follows, and the interactions of others with them. While there's no clear statement regarding the use of geographical location within these considerations, observations suggest that Facebook imposes more restrictions on individuals inside Gaza and the West Bank compared to users outside these regions, even if the content is similar."

"In platform X (formerly Twitter), there is no classifier for the Hebrew language. This leaves room for any harmful content to be spread without any repercussions, which is the complete opposite of how the platform handles the Arabic language"

Is Hebrew content subject to the same restrictions?

However, bias cannot be established without first checking whether posts in Hebrew, or those advocating for Israel, face the same censorship for hate speech and violent content. On this matter, Al-Ghanem states, "The report presented by Meta's social responsibility consulting company last year clearly states, through data-driven analysis, that posts supporting Palestine that get deleted significantly outnumber the deleted posts in favor of Israel. Furthermore, the number of deleted posts in the Arabic language is much higher than those in Hebrew."

Raseef22 reached out to Nadim Nashif, the General Manager of 7amleh - The Arab Center for the Advancement of Social Media based in Haifa, and inquired whether Israelis face the same treatment from Facebook, X, and Instagram. He responded, "Certainly, social media platforms do not apply sanctions evenly on both Palestinian and Israeli sides, and there is a clear and unmistakable bias in favor of Israel."

He adds, "In recent days, we have witnessed a significant increase in digital rights violations amid the current political situation. On the '7or' platform alone, we have documented more than 259 complaints since the events began on October 7th. These complaints encompass 99 violations that involve the suspension and restriction of accounts supporting Palestine and the removal of supportive content, along with 160 violations that include hate speech and incitement, primarily in the Hebrew language."

When asked to explain the higher number of deleted Arabic posts compared to Hebrew on social media platforms, he responds, "For many years, Meta had no Hebrew-language classifier, that is, an algorithm that categorizes content into specific topics. Facebook added one only a few months ago, under pressure that followed the May 2021 uprising. In contrast, an Arabic-language classifier has been working on content management since 2015, which confirms the disparities and bias in content management between Hebrew and Arabic."

A neutral report confirms, "The number of deleted posts in Arabic significantly surpasses the number of removed posts in Hebrew."

He also refers to the report titled "An Independent Due Diligence Exercise into Meta's Human Rights Impact in Israel and Palestine During the May 2021 Escalation", which was issued by the Business for Social Responsibility (BSR) organization. The report highlights a discriminatory distinction in content management between Arabic and Hebrew within Meta.

He further adds, "Regarding X (formerly Twitter), there is no classifier for the Hebrew language. This leaves room for any harmful content to be spread without any repercussions, which is the complete opposite of how the platform handles the Arabic language." Odeh attributes this to the owner of the company, Elon Musk, who has repeatedly expressed his political favoritism toward Israel.
